Live Demo

See how different AI models render the same mockup

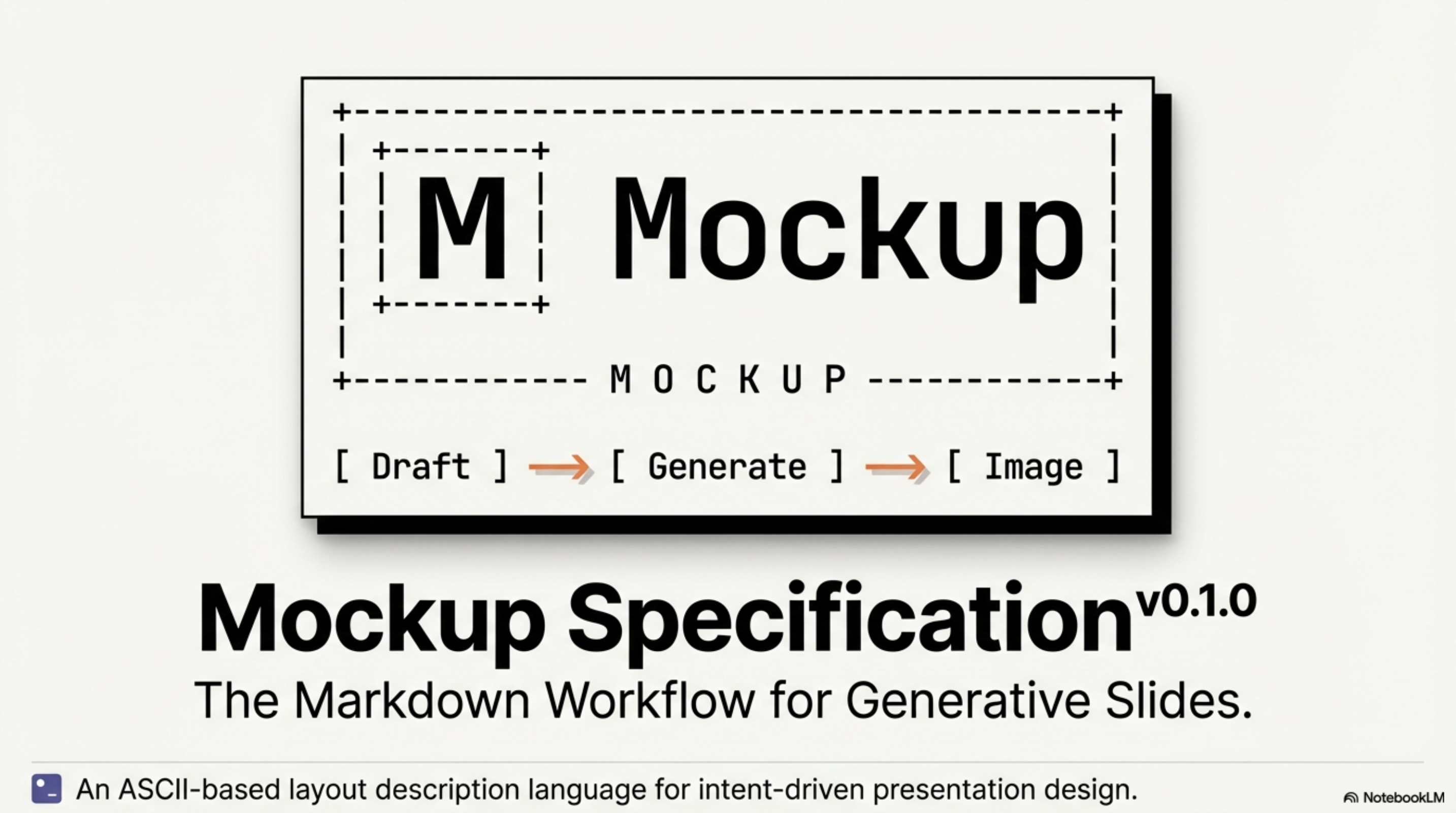

┌────────────────────────────────────────────────────────────────┐
│                                                                │
│      ┌─┐                                                       │
│      │M│ ockup                                                 │
│      └─┘                                                       │
│      The Markdown for Slides                                   │
│                                                                │
│      ┌──────────┐      ┌──────────┐      ┌──────────┐      │
│      │    📝    │      │    🤖    │      │    🎨    │      │
│      │  Draft   │ ───→ │ Generate │ ───→ │  Image   │      │
│      └──────────┘      └──────────┘      └──────────┘      │
│                                                                │
└────────────────────────────────────────────────────────────────┘

> Cover slide with workflow